Uber’s Self-Driving Cars Are Already Endangering Pedestrians, Bicyclists

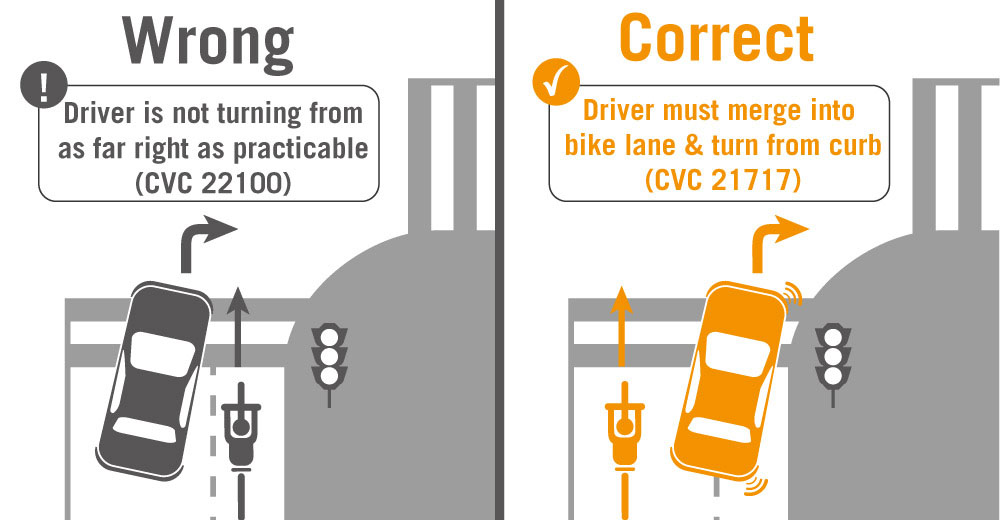

Uber’s self-driving cars don’t follow traffic rules when turning right. Image: San Francisco Bicycle Coalition

More from Streetsblog California

Bill to Require Speed Control in Vehicles Goes Limp

Also passed yesterday were S.B. 961, the Complete Streets bill, a bill on Bay Area transit funding, and a prohibition on state funding for Class III bikeways.

‘We Don’t Need These Highways’: Author Megan Kimble on Texas’ Ongoing Freeway Fights

...and what they have to teach other communities across America.

Wednesday’s Headlines

Brightline LA-to-Vegas promises quick construction; LA Metro buses set to test camera enforcement of bus lane obstruction; why are we still knocking down houses to build freeways?

Brightline West Breaks Ground on Vegas to SoCal High-Speed Rail

Brightline West will be a 218-mile, 186-mile-per-hour rail line from Vegas to Rancho Cucamonga (about 40 miles east of downtown L.A.), expected to open in 2028.

CalBike Summit to Advocates: Don’t Take No for an Answer

"Persistence with kindness." "Keep trying different things." "You have to be kind of annoying." "Light up their phones."