Uber’s Self-Driving Cars Are Already Endangering Pedestrians, Bicyclists

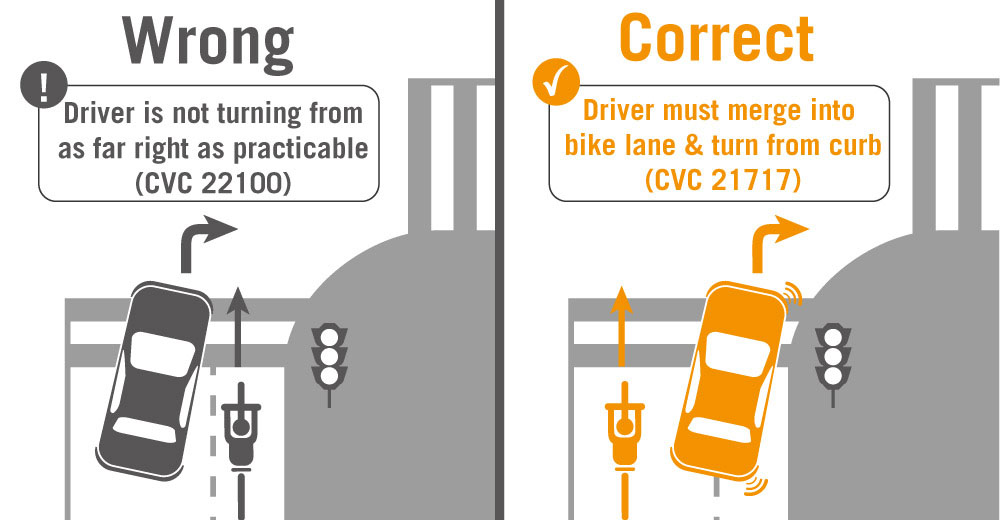

Uber’s self-driving cars don’t follow traffic rules when turning right. Image: San Francisco Bicycle Coalition
